Introduction

Building on our previous guide, How to Deploy Highly Available Ceph on Proxmox, this guide, How to Deploy a Multi-Site Ceph Object Gateway, extends that setup into a storage infrastructure that is more resilient and can recover from the loss of an entire site.

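The Ceph Object Gateway's multisite feature replicates S3-compatible object data across multiple Ceph clusters. This provides geo-redundancy and disaster recovery, and it lets clients at each site use their local gateway. Replication is asynchronous, so the zones converge on the same data over time rather than instantly. If the primary site fails, a secondary zone can be promoted to master.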

This guide covers deploying a multi-site Ceph RADOS Gateway (RGW). It walks through configuring the realm, zone group, and zones, and then verifying that the zones synchronize.

This guide assumes that you have already deployed a Ceph cluster at each site and a RADOS Gateway on at least the primary cluster. If not, refer to our guide: How To Deploy Highly Available Ceph on Proxmox.

By the end, you’ll have a distributed object storage solution capable of replicating data across Ceph clusters.

Understanding Ceph Multisite Architecture

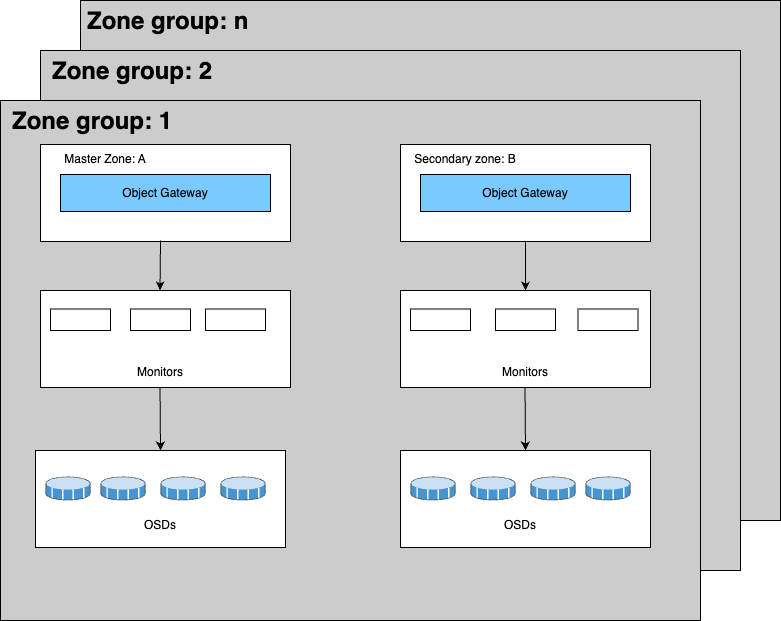

A Ceph multisite configuration consists of three key components:

- Zone – One or more Object Gateway (RGW) instances and the storage pools they serve. In this guide, each Ceph cluster hosts one zone.

- Zone Group – A set of zones where data is replicated. One zone acts as the master, while others are secondary.

- Realm – The global namespace containing zone groups. One zone group is the master, managing metadata and configurations, while others are secondary.

All RGWs sync their configurations from the master zone in the master zone group, and operations like user creation must be performed there.
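You can inspect this hierarchy on a running cluster with radosgw-admin. The following is a minimal sketch, assuming a POSIX shell on a node that holds the Ceph admin keyring. The run helper and its DRY_RUN switch are hypothetical conveniences added for illustration and are not part of Ceph.

```shell
# Hypothetical helper: print the command instead of executing it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Show the realm -> zone group -> zone hierarchy known to this cluster.
show_multisite_layout() {
    run radosgw-admin realm list       # realms, including the default realm
    run radosgw-admin zonegroup list   # zone groups
    run radosgw-admin zone list        # zones
    run radosgw-admin period get       # current period: master zone group, master zone, endpoints
}
```

Source the file and call show_multisite_layout. With DRY_RUN=1 set, it only prints the four commands.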

Procedure

Configuring Multisite RGW Deployments

Configure the Master Zone

1. Create the Realm

# radosgw-admin realm create --default --rgw-realm=gold

2. Create the Master Zone Group

# radosgw-admin zonegroup create --rgw-zonegroup=us --master --default --endpoints=http://node01:80

3. Create the Master Zone

# radosgw-admin zone create --rgw-zone=datacenter01 --master --rgw-zonegroup=us --endpoints=http://node01:80 --access-key=12345 --secret-key=67890 --default

4. Create a System User

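# radosgw-admin user create --uid=sysadm --display-name="SysAdmin" --access-key=12345 --secret-key=67890 --system

The access and secret keys must match the ones given to the master zone in step 3. The values 12345 and 67890 are only placeholders; use long random values in production.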

5. Commit the Changes

# radosgw-admin period update --commit

6. Update the Zone Name in the Configuration Database

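# ceph config set client.rgw rgw_zone datacenter01

After changing rgw_zone, restart the RADOS Gateway daemons on this cluster so they pick up the new zone. How you restart them depends on how the gateways were deployed.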
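You can also collect the master-zone steps into one function, which makes it harder to mistype the shared keys. This is a sketch that uses this guide's example names (gold, us, datacenter01, http://node01:80) as overridable defaults. The random_key, run, and DRY_RUN helpers are hypothetical. Generating keys from /dev/urandom is one possible way to replace the fixed 12345/67890 placeholders.

```shell
# Example names from this guide; override any of them via the environment.
REALM=${REALM:-gold}
ZONEGROUP=${ZONEGROUP:-us}
ZONE=${ZONE:-datacenter01}
ENDPOINT=${ENDPOINT:-http://node01:80}

# Hypothetical helper: print the command instead of executing it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Hypothetical helper: keep the first $1 characters of A-Z0-9 found in 2 KiB of random bytes.
random_key() {
    head -c 2048 /dev/urandom | LC_ALL=C tr -dc 'A-Z0-9' | cut -c "1-$1"
}

setup_master_zone() {
    # Reuse keys from the environment if given, otherwise generate new ones.
    ACCESS_KEY=${ACCESS_KEY:-$(random_key 20)}
    SECRET_KEY=${SECRET_KEY:-$(random_key 40)}

    run radosgw-admin realm create --rgw-realm="$REALM" --default
    run radosgw-admin zonegroup create --rgw-zonegroup="$ZONEGROUP" \
        --endpoints="$ENDPOINT" --master --default
    run radosgw-admin zone create --rgw-zonegroup="$ZONEGROUP" --rgw-zone="$ZONE" \
        --endpoints="$ENDPOINT" --access-key="$ACCESS_KEY" --secret-key="$SECRET_KEY" \
        --master --default
    # The system user must carry exactly the same keys as the zone.
    run radosgw-admin user create --uid=sysadm --display-name=SysAdmin \
        --access-key="$ACCESS_KEY" --secret-key="$SECRET_KEY" --system
    run radosgw-admin period update --commit
    run ceph config set client.rgw rgw_zone "$ZONE"
    # Restart the RGW daemons afterwards; the method depends on how they were deployed.

    # Report the keys on stderr; the secondary zone needs the same pair.
    printf 'ACCESS_KEY=%s\nSECRET_KEY=%s\n' "$ACCESS_KEY" "$SECRET_KEY" >&2
}
```

For example, DRY_RUN=1 setup_master_zone prints the six commands for review, and running it without DRY_RUN executes them. Store the reported keys securely, because the secondary zone must use the same pair.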

Configure the Secondary Zone

Run the following example steps on the secondary cluster to configure its RADOS Gateway as the secondary zone.

1. Pull the Realm Configuration

# radosgw-admin realm pull --rgw-realm=gold --url=http://node01:80 --access-key=12345 --secret-key=67890 --default

2. Pull the Period

# radosgw-admin period pull --url=http://node01:80 --access-key=12345 --secret-key=67890

3. Create the Secondary Zone

# radosgw-admin zone create --rgw-zone=datacenter02 --rgw-zonegroup=us --endpoints=http://node02:80 --access-key=12345 --secret-key=67890 --default

4. Commit the Changes

# radosgw-admin period update --commit

5. Create the RADOS Gateway service for the secondary zone (if it is not configured yet).

6. Update the Zone Name in the Configuration Database

# ceph config set client.rgw rgw_zone datacenter02

As on the master zone, restart the RADOS Gateway daemons on this cluster after changing rgw_zone.
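You can script the secondary-zone steps the same way. This is a minimal sketch that uses this guide's example names as defaults and assumes ACCESS_KEY and SECRET_KEY hold the master zone's system user keys. The run and DRY_RUN helpers are hypothetical. The curl call is an added reachability check of the master endpoint, not one of the guide's steps.

```shell
# Example names from this guide; override any of them via the environment.
REALM=${REALM:-gold}
ZONEGROUP=${ZONEGROUP:-us}
ZONE=${ZONE:-datacenter02}
ENDPOINT=${ENDPOINT:-http://node02:80}
MASTER_URL=${MASTER_URL:-http://node01:80}

# Hypothetical helper: print the command instead of executing it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

setup_secondary_zone() {
    # The master zone's system user keys are required for every pull.
    if [ -z "${ACCESS_KEY:-}" ] || [ -z "${SECRET_KEY:-}" ]; then
        echo "set ACCESS_KEY and SECRET_KEY to the master zone's system user keys" >&2
        return 1
    fi

    # Fail early if the master zone's gateway cannot be reached at all.
    run curl -sS -o /dev/null "$MASTER_URL" || return 1

    run radosgw-admin realm pull --rgw-realm="$REALM" --url="$MASTER_URL" \
        --access-key="$ACCESS_KEY" --secret-key="$SECRET_KEY" --default
    run radosgw-admin period pull --url="$MASTER_URL" \
        --access-key="$ACCESS_KEY" --secret-key="$SECRET_KEY"
    run radosgw-admin zone create --rgw-zonegroup="$ZONEGROUP" --rgw-zone="$ZONE" \
        --endpoints="$ENDPOINT" --access-key="$ACCESS_KEY" --secret-key="$SECRET_KEY" --default
    run radosgw-admin period update --commit
    # Deploying the RGW service itself (step 5) depends on your tooling and is not scripted here.
    run ceph config set client.rgw rgw_zone "$ZONE"
    # Restart the RGW daemons afterwards so they serve the new zone.
}
```

If either key is missing, the function refuses to run rather than sending empty credentials to the master zone.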

Great! The multisite configuration is now in place: the realm, the zone group, and both zones are defined, and the two clusters are ready to synchronize object data.

To verify the setup, run the following on the secondary cluster:

# radosgw-admin sync status
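For scripted checks, such as monitoring, you can test the summary lines "metadata is caught up with master" and "data is caught up with source" directly. This is a minimal sketch with several assumptions. It assumes a single data sync source, as in this two-zone setup. It also assumes the input comes from the secondary zone, because the master zone does not perform metadata sync and prints no metadata "caught up" line. sync_caught_up is a hypothetical helper that matches radosgw-admin's human-readable wording, which could change between Ceph releases.

```shell
# Hypothetical helper: read `radosgw-admin sync status` output on stdin and
# succeed only if metadata and data sync both report being caught up.
sync_caught_up() {
    status=$(cat)
    printf '%s\n' "$status" | grep -q 'metadata is caught up with master' || return 1
    printf '%s\n' "$status" | grep -q 'data is caught up with source' || return 1
    # Lines such as "... is behind on N shards" mean replication is still catching up.
    if printf '%s\n' "$status" | grep -q 'is behind'; then
        return 1
    fi
    return 0
}
```

A typical call is radosgw-admin sync status | sync_caught_up && echo "zone is in sync".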

Example of the expected output of radosgw-admin sync status on the secondary zone:

              realm 9eef2ff2-5fb1-4398-a69b-eeb3d9610638 (gold)
          zonegroup d3524ffb-8a3c-45f1-ac18-23db1bc99071 (us)
               zone 3879a186-cc0c-4b42-8db1-7624d74951b0 (datacenter02)
      metadata sync syncing
                    full sync: 0/64 shards
                    incremental sync: 64/64 shards
                    metadata is caught up with master
          data sync source: 4f1863ca-1fca-4c2d-a7b0-f693ddd14882 (datacenter01)
                            syncing
                            full sync: 0/128 shards
                            incremental sync: 128/128 shards
                            data is caught up with source

Summary

With the Ceph Multisite configuration successfully deployed, your clusters are now synchronized under a unified geo-redundant object storage setup. By defining realms, zone groups, and zones, you’ve ensured high availability, disaster recovery, and data replication across multiple locations. This architecture provides scalable, fault-tolerant S3-compatible storage, making your infrastructure more resilient and efficient.
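Beyond sync status, a simple end-to-end check is to write an object through the master zone and wait for it to appear in the secondary zone. This is a minimal sketch that assumes the AWS CLI is installed. It also assumes AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY hold the keys of a regular S3 user created on the master zone, since users must be created there. The bucket and object names, the TRIES and INTERVAL polling settings, and the run/DRY_RUN helper are hypothetical.

```shell
MASTER_URL=${MASTER_URL:-http://node01:80}
SECONDARY_URL=${SECONDARY_URL:-http://node02:80}
BUCKET=${BUCKET:-sync-test-bucket}     # hypothetical bucket name
OBJECT_KEY=${OBJECT_KEY:-sync-test.txt} # hypothetical object key

# Hypothetical helper: print the command instead of executing it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

replication_smoke_test() {
    # Create the bucket and upload a small object (read from stdin) via the master zone.
    run aws --endpoint-url "$MASTER_URL" s3 mb "s3://$BUCKET"
    printf 'written via %s\n' "$MASTER_URL" |
        run aws --endpoint-url "$MASTER_URL" s3 cp - "s3://$BUCKET/$OBJECT_KEY"

    # Replication is asynchronous, so poll the secondary zone until the object appears.
    i=0
    while [ "$i" -lt "${TRIES:-30}" ]; do
        if run aws --endpoint-url "$SECONDARY_URL" s3api head-object \
            --bucket "$BUCKET" --key "$OBJECT_KEY" >/dev/null 2>&1; then
            echo "object replicated to $SECONDARY_URL"
            return 0
        fi
        i=$((i + 1))
        sleep "${INTERVAL:-10}"
    done
    echo "object not visible on $SECONDARY_URL after $i attempts" >&2
    return 1
}
```

With the defaults, the function waits up to about five minutes before giving up. The s3 mb call fails harmlessly if the bucket already exists from an earlier run.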